- Published on

Optimal Transport and Perfect Matching

- Authors

- Name

- Michael Shi

- Follow me

Why is this important?

The main reason I decided to look into matching algorithms is because of their value in finding the minimal cost in machine learning. This is extremely important because we can use these algorithm to compare distributions of data by posing it as a matching problem. In machine learning, this might be useful for calculating how well a model performs by comparing the Wasserstein distances between the performance on sample distribution and the full distribution.

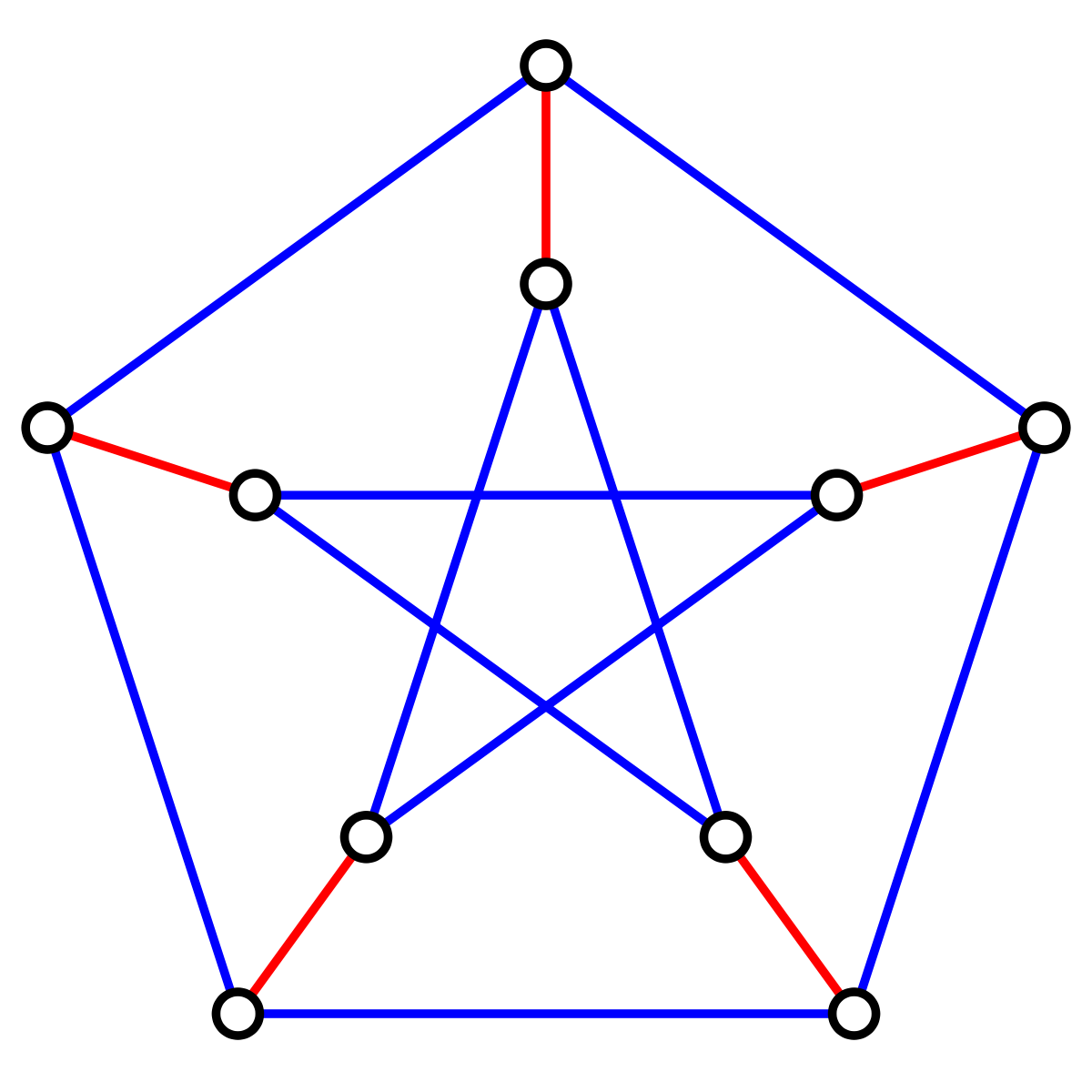

What is a perfect matching:

A perfect matching is a graph where every vertex is matched to another vertex such that all vertices are covered with a single edge

How can we find perfect matchings?

Push-Relabel Algorithm:

We have dual weights for each vertex to maintain some invariants.

We have this function

where

- is the reduced cost

- is the dual weight for vertex x

- and are edges

Invariants:

- all reduced costs 0

- Every is tight

Theorem: If graph M is perfect and the invariants hold, the M is minimal cost

With this theorem we get the following linear program:

We can now iteratively solve for the minimal cost with a slack variable

Application and WGAN

Earth movers Distance

Constraints on marginals

Problem with original GAN architecture is due to the use of KL-Divergence fails to compute distances when the two distribution or non-overlapping. WGAN addresses this by using Earth mover's Distance.

Transport Plan

We can derive the dual formulation of EMD, by making this a maximization instead of a minimization

which satisfies for all . This 1-Lipschitz condition ensures there is smoothness between two distributions on the discriminator corresponding to

To ensure Lipschitz condition on a neural net we bound the weights by clipping them then the slope can't be bigger the magnitude of the clipping.

Wasserstein GAN uses this dual formulation of Earth Mover's Distance

The main difference between Wasserstein GAN and regular GAN objectives is that it uses linear activation instead of sigmoid in the discriminator and removes the logs